3.2. Part 2: creating and importing image features¶

Within GridFix, a “feature” defines a two-step transformation on an image and an associated RegionSet. In the first step, each input image may be transformed in a way that is useful for the respective analysis, such as generating a contrast map or edge density image. The resulting feature map (which is stored and processed as a numpy array) is then summarized using the RegionSet so that one feature value per region is returned.

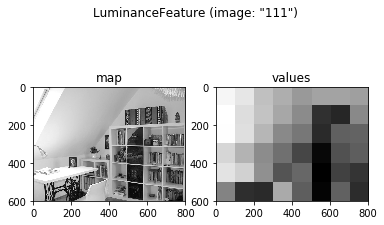

As an example, the most simple feature object is LuminanceFeature, which transforms an RGB image into a greyscale luminance image (using the same process as Matlab’s rgb2gray.m) and then returns the mean luminance value for each region of interest / grid cell.

In [7]:

# Import GridFix toolbox and related modules

%matplotlib inline

import numpy as np

import matplotlib as mp

import matplotlib.pyplot as plt

from gridfix import *

Loading data: In oder to be able to create and import various image features, we will first load the same example images used in part 1 and again define a 8-by-6 grid for evaluation. If we leave out all the plotting and example code, that’s just two lines of Python code:

In [8]:

images = ImageSet('images/tutorial_images.csv', label='tutorial')

grid = GridRegionSet(size=images.size, gridsize=(8,6), label='testgrid')

3.2.1. 1. Creating features from images¶

GridFix has some built-in image features than can be used directly on

input images. To illustrate, let’s create the aforementioned

LuminanceFeature for our input images and the previously defined grid.

print()ing a Feature object will show details on the Feature

itself as well as the associated datasets.

In [9]:

fLum = LuminanceFeature(grid, images)

print(fLum)

<gridfix.LuminanceFeature, length=48>

Regions:

<gridfix.GridRegionSet (testgrid), size=(800, 600), 8x6 grid, 48 cells, memory=22500.0 kB>

Images:

<gridfix.ImageSet "tutorial", 15 images, size=(800, 600)>

A feature’s main result is a vector of feature values whose length

depends on the associated RegionSet (i.e., one value per region). In our

example, the newly defined feature fLum has a length of 48, one mean

luminance value for each grid cell. To see the feature values for a

feature object, apply() it to one of the imageids in the set (as

in the previous tutorial, feel free to change the imageid and observe

the result):

In [12]:

example_img = '111'

fLum.apply(example_img)

Out[12]:

array([ 0.93599965, 0.89392132, 0.79004721, 0.74111512, 0.68258492,

0.71182295, 0.71191775, 0.7004852 , 0.96326098, 0.86776976,

0.80081014, 0.72126652, 0.64451908, 0.39865884, 0.36996616,

0.6401726 , 0.96006093, 0.87535547, 0.76301469, 0.63979265,

0.58886162, 0.37999733, 0.52692699, 0.53575212, 0.84881097,

0.75357533, 0.64711389, 0.57149906, 0.45624284, 0.28651496,

0.48697679, 0.52280946, 0.88639214, 0.83512268, 0.65783505,

0.49580753, 0.54547947, 0.2634846 , 0.44386838, 0.40858254,

0.62477865, 0.39145541, 0.37857847, 0.7307231 , 0.52127252,

0.27579 , 0.52870886, 0.38594558])

The vector of length 48 displayed above will later be incorporated into the GLMM predictor matrix. However, the pure values are not very intuitive when exploring data. Therefore, all Feature() objects support plotting, which will apply the feature to the specified imageid and then (by default) display both the resulting feature map and the accompanying RegionSet, with each region shaded according to it’s feature value (normalized to the full display range of 0..255 by default).

In [13]:

fLum.plot(example_img)

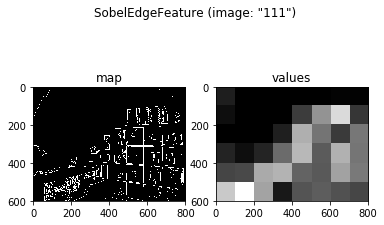

Now that we know how to define a feature based on an ImageSet, let’s add another feature, this time investigating the edge density map returned by the Sobel edge detector. Feature values here are defined as the proportion of edges in each region / grid cell (because the edge map is binarized, this is equivalent to the mean of each region):

In [14]:

fEdge = SobelEdgeFeature(grid, images)

fEdge.plot(example_img)

3.2.2. 2. Using pre-generated feature maps¶

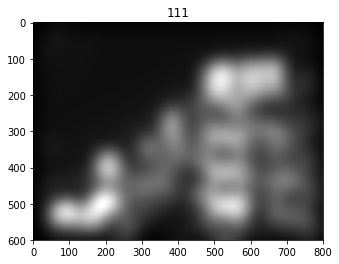

GridFix can, of course, also load predefined feature maps directly from image- or MATLAB files, such as the output maps generated by a saliency model or image segmentation algorithm. In this example, we will use saliency maps created by the well-known saliency model by Itti, Koch & Niebur (1998) [2].

For external feature maps, typically each map corresponds to a single

image from the fixation dataset. Therefore, an intuitive way to

represent a collection of feature maps is as another ImageSet object

(internally, ImageSets are a collection of high-precision floating point

matrices). The following example shows a way to load files for an

ImageSet that is different from the example in part 1: here, we load a

folder containing .mat files and explicitly specify the imageids to

assign to each image in the folder. The attribute mat_var='IKN98'

tells the software to load the IKN98 matrix from the specified MATLAB

file, allowing to store multiple input maps in the same file:

In [18]:

ids = ['112', '67', '6', '52', '37', '106', '129', '9', '107', '97', '58', '111', '85', '149', '150']

maps = ImageSet('maps', imageids=ids, mat_var='IKN98')

maps.plot(example_img)

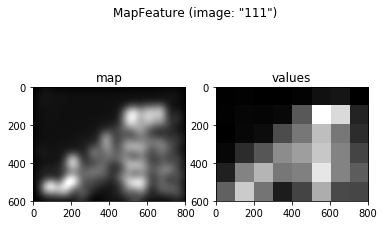

Now that the IKN98 feature maps have been loaded, we can create a

feature to assign each region (grid cell) a unique value based on the

input feature map. The corresponding Feature object is called

MapFeature. This feature object does not transform the input map in

any way and simply applies a statistical function to each region. The

function to use can be specified in the stat= parameter (default is

stat=np.mean to return the mean feature value per region). All numpy

statistics functions work, such as mean, std, median and so

on, but this feature will also accept any other function that can

process an array and return a scalar. In our example, the following code

creates a MapFeature which returns the mean saliency map value in each

grid cell:

In [19]:

fIKN = MapFeature(grid, maps, stat=np.mean)

fIKN.plot(example_img)

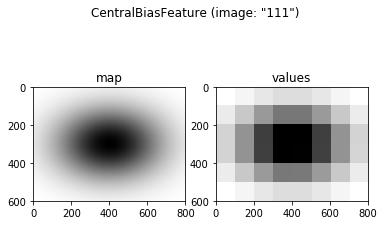

3.2.3. 3. A special case: the Central Viewing Bias “feature”¶

When viewing a naturalistic scene on a computer screen, human observers

exhibit a strong bias towards the center of the image, even when the

center of the image does not even contain the most salient image

features or the most information [3]. Even though the assumption of

higher fixation probabilities for more central regions is not

technically a feature of the input image, central viewing bias can be

modeled in GridFix as a special Feature() object, the

CentralBiasFeature. This object type returns the distance between

image center and each region’s center of gravity (CoG) as its feature

values, using one of the following models of central viewing bias:

- Euclidean distance in pixels

- Gaussian distance measure as proposed in [4], with adjustable \(\sigma^2\) and \(\nu\) parameters

- Manhattan- / Taxicab metric (see https://en.wikipedia.org/wiki/Taxicab_geometry)

A CentralBiasFeature can be defined and plotted like any other feature (note that only some variants create an actual feature map, which is not needed for e.g. euclidean distance). For this example, we will use the “gaussian” distance measure, keeping the default parameters suggested by Clarke and Tatler in [3]. As always, you can try other values and observe how they change the feature map.

In [20]:

fCent = CentralBiasFeature(grid, images, measure='gaussian', sig2=0.23, nu=0.45)

print(fCent)

fCent.plot(example_img)

<gridfix.CentralBiasFeature, length=48, measure "gaussian", sig2=0.23, nu=0.45>

Regions:

<gridfix.GridRegionSet (testgrid), size=(800, 600), 8x6 grid, 48 cells, memory=22500.0 kB>

Images:

<gridfix.ImageSet "tutorial", 15 images, size=(800, 600), normalized>

3.2.4. 4. Concluding Remarks¶

This concludes the second part of our GridFix tutorial. Since we now know how to create and import the image features we want to compare to our participants’ fixation data, the next step in this tutorial will explain how to combine all these puzzle pieces into a GLMM predictor matrix and run the resulting model in R.

3.2.5. References¶

[1] Nuthmann, A., & Einhäuser, W. (2015). A new approach to modeling the influence of image features on fixation selection in scenes. Annals of the New York Academy of Sciences, 1339(1), 82-96. http://dx.doi.org/10.1111/nyas.12705

[2] Itti, L., Koch, C. & Niebur, E. (1998). A model of saliency-based visual attention for rapid scene analysis. IEEE Transactions on Pattern Analysis & Machine Intelligence (11), 1254-1259. http://dx.doi.org/10.1109/34.730558

[3] Tatler, B. W. (2007). The central fixation bias in scene viewing: selecting an optimal viewing position independently of motor biases and image feature distributions. J Vis 7(14), 411-417. http://dx.doi.org/10.1167/7.14.4

[4] Clarke, A. D. F. & Tatler, B. W. (2014). Deriving an appropriate baseline for describing fixation behaviour. Vision Res 102, 41-51. http://dx.doi.org/10.1016/j.visres.2014.06.016